Over the last year, Generative AI has become a popular tool for creating various forms of content, including text, images, and audio. Many developers are now exploring how to incorporate these systems into their applications to benefit their users.

However, with the technology advancing rapidly and new models and SDKs being released constantly, it can be difficult for developers to know where to begin. While there are many polished end-to-end sample applications available for .NET developers to use as a reference, some may prefer to build their applications incrementally, starting with the basics and gradually adding more advanced features.

- 1. Building a console-based .NET chat app with solutions like Semantic Kernel

- 2. How to get started with Semantic Kernel SDK. Learn how to use it

- 3. Leveraging Semantic functions: reusable prompts, dynamic input handling, and plugins

- 4. Does LLM have memory? How to use Semantic Kernel to overcome statelessness in chat agents

- 5. Does LLM know everything? Connectors and RAG with Semantic plugins and native functions

- 6. Storing memories

- 7. Summary

Building a console-based .NET chat app with solutions like Semantic Kernel

This post aims to guide developers in building a simple console-based .NET chat application from scratch, with minimal dependencies and fuss. The ultimate goal is to create an application that can answer questions based on both the data used to train the model and additional data provided dynamically. Each code sample provided in this post is a complete application, allowing developers to easily copy, paste, and run the code, experiment with it, and then incorporate it into their own applications for further refinement and customization.

How to get started with Semantic Kernel SDK. Learn how to use it

To begin, make sure you have .NET 8 installed, and create a simple console app:

```shell
dotnet new console -o chat-sample-app-01 --use-program-main
cd chat-sample-app-01
```

This creates a new directory chat-sample-app-01 and populates it with two files: chat-sample-app-01.csproj and Program.cs. We then need to bring in one NuGet package: Microsoft.SemanticKernel.

```shell
dotnet add package Microsoft.SemanticKernel
```

Find out more at Microsoft Learn

Planning, orchestration, and multiple plugins

Instead of referencing specific AI-related packages such as Azure.AI.OpenAI, I have opted for the open source Semantic Kernel SDK to streamline various interactions and to switch easily between different implementations for faster experimentation. Semantic Kernel offers a collection of libraries that simplify working with Large Language Models (LLMs) by providing abstractions for various AI concepts, so that one implementation can be substituted for another. It also includes many concrete implementations of these abstractions, wrapping numerous other SDKs, and offers support for planning, orchestration, and multiple plugins. This post will explore various aspects of Semantic Kernel, but my primary focus is on its abstractions.

While I have tried to keep dependencies to a minimum for the purpose of this article, there is one more I cannot avoid: you need access to an LLM. The easiest way to get access is via either OpenAI or Azure OpenAI. For this post, I am using Azure OpenAI. You will need three pieces of information for the remainder of the post:

- Your API key and endpoint provided to you in the Azure portal

- A chat model, or to be more precise, the deployment name of your model. I use GPT-4-32k (0613), which as of this writing has a context window of 32K tokens. I’ll explain more about what it is later.

- An embedding model. I use text-embedding-3-large.

Let’s make it as easy as possible

With that out of the way, we can dive in. Believe it or not, we can create a simple chat app in just a few lines of code. Copy and paste this into your Program.cs:

```csharp
using Microsoft.SemanticKernel;

namespace chatSampleApp01
{
    class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            //Question and Answer loop
            string question;
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;
                Console.Write("Mine Copilot: ");
                Console.WriteLine(await kernel.InvokePromptAsync(question));
                Console.WriteLine();
            }
        }
    }
}
```

To avoid accidentally revealing my API key, which should be safeguarded like a password, I have stored it in an environment variable and read it using GetEnvironmentVariable. Then I created a new kernel using the Semantic Kernel APIs and added an Azure OpenAI chat completion service to it. The Microsoft.SemanticKernel package we imported earlier includes references to client support for both OpenAI and Azure OpenAI, eliminating the need for additional components to communicate with these services. With this configuration, we can now run our chat app using dotnet run, enter questions, and receive responses from the service.

The expression await kernel.InvokePromptAsync(question) is the core of the interaction with the LLM, where it captures the user’s input and sends it to the LLM, receiving a string response in return. Semantic Kernel is equipped to handle various function types, including prompt functions for text-based AI interactions and standard .NET methods capable of executing any C# code. These functions can be triggered directly by the user, as shown in this example, or as part of a “plan” where a set of functions is provided to the LLM to formulate a strategy to achieve a specified objective. Semantic Kernel can execute these functions as per the plan (I will show it later). Additionally, some models support a “function calling” feature, which is also simplified by Semantic Kernel.

Leveraging Semantic functions: reusable prompts, dynamic input handling, and plugins

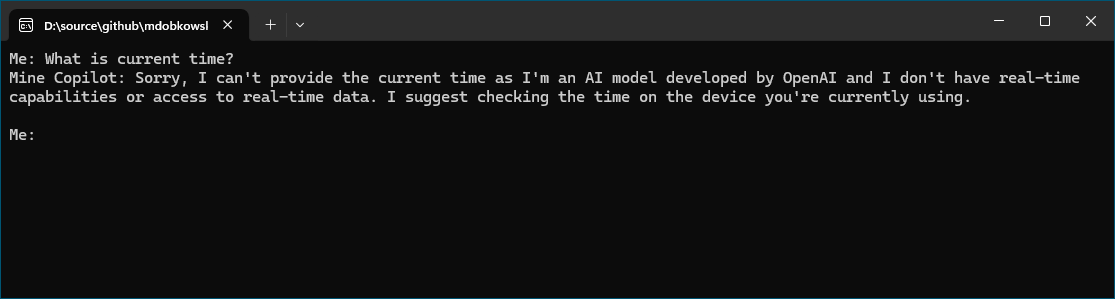

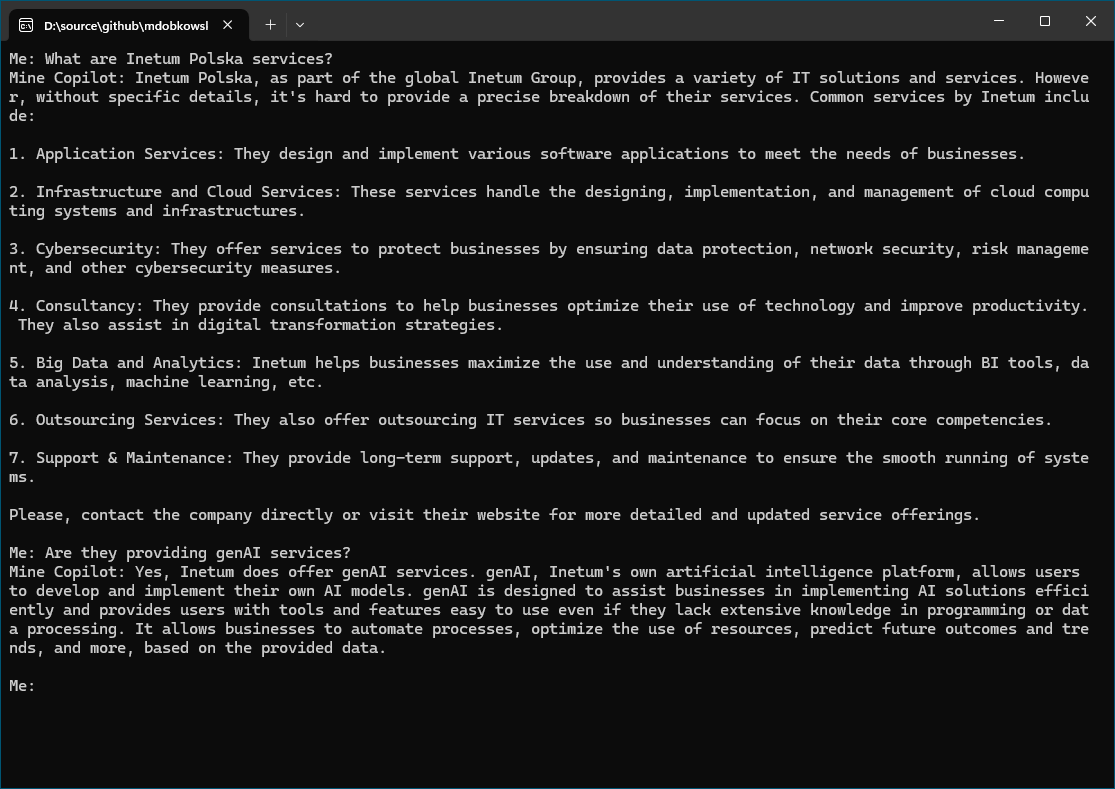

In this instance, “function” refers to the user’s input, such as the question “What is Inetum Polska?”, which is then processed by the LLM through the InvokePromptAsync method. To make the concept of a “function” explicit, we can extract it into a separate entity using the CreateFunctionFromPrompt method (the sample below uses the equivalent KernelFunctionFactory.CreateFromPrompt), allowing us to reuse the same function for multiple inputs. This approach eliminates the need to create a new function for each input, but requires a way to incorporate the user’s input into the existing function. Semantic Kernel supports this through prompt templates, which include placeholders that are filled with the appropriate variables and function results. For example, if the sample is run again with a request for the current time, the LLM will not be able to provide an answer:

To anticipate such inquiries, we can equip the LLM with the necessary information within the prompt itself. I have registered a function with the kernel that provides the current date and time. Subsequently, I created a prompt function that utilizes a prompt template to invoke this time function during the prompt’s rendering. This template also incorporates the value of the $input variable. It is possible to pass any number of arguments with arbitrary names using a KernelArguments dictionary; in this case, I have chosen to name one “input”. Functions are organized into collections known as “plugins”.

```csharp
using Microsoft.SemanticKernel;

namespace chatSampleApp02
{
    class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);

            // Register a native function ("Now") as part of a plugin and add it to the kernel.
            builder.Plugins.AddFromFunctions(
                pluginName: "DateTimeHelpers",
                functions: [
                    KernelFunctionFactory.CreateFromMethod(
                        () => $"{DateTime.UtcNow:r}", "Now", "Gets the current date and time"
                    )
                ]);
            var kernel = builder.Build();

            // Create the prompt function; its template calls the Now function and reads $input.
            var kernelFunction = KernelFunctionFactory.CreateFromPrompt(
                promptTemplate: @"
                The current date and time is {{ datetimehelpers.now }}.
                {{ $input }}"
            );

            //Question and Answer loop
            string question;
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;
                Console.Write("Mine Copilot: ");
                Console.WriteLine(await kernelFunction.InvokeAsync(kernel, new() { ["input"] = question }));
                Console.WriteLine();
            }
        }
    }
}
```

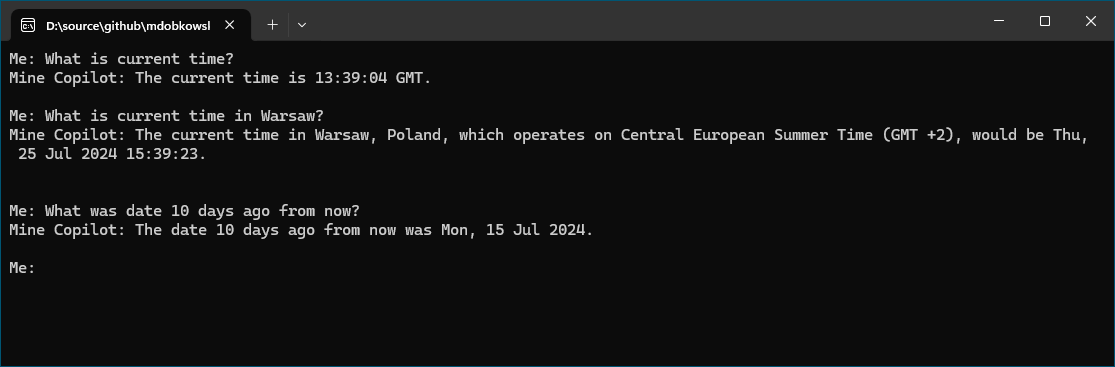

When the function is activated, it renders the prompt by calling the previously registered ‘Now’ function and integrating its output into the prompt. Now, posing the same question yields a more comprehensive answer.
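To make the rendering step less abstract, here is a toy sketch of what filling such a template involves. It is not how Semantic Kernel implements prompt templates; ToyTemplate, Render, and the two dictionaries are hypothetical names introduced only for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Toy illustration only: this is NOT Semantic Kernel's template engine.
public static class ToyTemplate
{
    // Matches "{{ $variable }}" or "{{ plugin.function }}" with optional spaces.
    private static readonly Regex Placeholder =
        new Regex(@"\{\{\s*(\$?[\w.]+)\s*\}\}");

    public static string Render(
        string template,
        IDictionary<string, string> variables,
        IDictionary<string, Func<string>> functions)
    {
        return Placeholder.Replace(template, match =>
        {
            string token = match.Groups[1].Value;

            // "$name" refers to a variable supplied by the caller.
            if (token[0] == '$')
            {
                return variables.TryGetValue(token.Substring(1), out var value)
                    ? value
                    : string.Empty;
            }

            // Anything else is treated as a function name and invoked at render time.
            return functions.TryGetValue(token, out var function)
                ? function()
                : string.Empty;
        });
    }
}
```

Calling Render with a variable named input and a function registered under DateTimeHelpers.Now (in a dictionary created with a case-insensitive comparer) would produce the final prompt text before anything is sent to the model.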

Does LLM have memory? How to use Semantic Kernel to overcome statelessness in chat agents

We have made significant strides: with just a few lines of code, we have crafted a basic chat agent that can field repeated questions and provide responses. Moreover, we have managed to furnish it with extra prompt information to aid in answering questions it would otherwise be unable to tackle. Yet, in doing so, we have also fashioned a chat agent devoid of memory, lacking any awareness of prior conversations:

To remedy the statelessness of LLMs and their lack of memory, we must maintain a record of our chat history and incorporate it into each prompt request. This can be done manually by integrating the chat history into the prompt, or we can rely on Semantic Kernel to handle it for us, which in turn can depend on the clients for Azure OpenAI, OpenAI, or any other chat service. The latter approach involves using the registered IChatCompletionService to create a new chat, which is essentially a compilation of all messages. This method not only processes requests and outputs responses but also archives them into the chat history.

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

namespace chatSampleApp03
{
    class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            //Create new chat
            var chatService = kernel.GetRequiredService<IChatCompletionService>();
            var chat = new ChatHistory(
                systemMessage: "You are an AI assistant that helps people find information."
            );

            //Question and Answer loop
            string question;
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;
                chat.AddUserMessage(question);

                Console.Write("Mine Copilot: ");
                var answer = await chatService.GetChatMessageContentAsync(chat);
                chat.AddAssistantMessage(answer.Content!);
                Console.WriteLine(answer);
                Console.WriteLine();
            }
        }
    }
}
```

With that chat history rendered into an appropriate prompt, we then get back much more satisfying results:

In a practical application, it is crucial to consider various additional factors, such as the limit on how much data a language model can process at once, referred to as the “context window.” The GPT-4-32k (0613) model that I am using here can handle about 32,000 tokens, where a token can be a full word, part of a word, or a single character. Additionally, each token incurs a cost for every interaction. Therefore, when moving from a trial phase to full production, it becomes essential to monitor the size of the chat history closely and to manage it, for example by removing parts that are no longer needed.
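One simple way to keep the history within a budget is to drop the oldest exchanges first. The sketch below shows the idea on a plain list of strings. HistoryTrimmer and its methods are hypothetical names, and the four-characters-per-token estimate is a crude assumption; a real tokenizer would be needed for exact counts.

```csharp
using System;
using System.Collections.Generic;

public static class HistoryTrimmer
{
    // Crude assumption: roughly four characters per token.
    public static int EstimateTokens(string text) => (text.Length + 3) / 4;

    // Removes the oldest messages after the first one (assumed to be the
    // system message) until the estimated total fits within the budget.
    public static void Trim(List<string> messages, int maxTokens)
    {
        int total = 0;
        foreach (var message in messages)
        {
            total += EstimateTokens(message);
        }

        while (total > maxTokens && messages.Count > 1)
        {
            total -= EstimateTokens(messages[1]);
            messages.RemoveAt(1);
        }
    }
}
```

The same idea could be applied to the ChatHistory used in these samples, which can be treated as a list (the later samples rely on chat.Count and chat.RemoveAt).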

We can enhance the user experience by adding a small segment of code that accelerates the interaction. These large language models (LLMs) generate responses by predicting the next token, so while we have been displaying the complete response once it is fully generated, we can actually present it in real time as it is being formulated. This functionality is available in Semantic Kernel through IAsyncEnumerable, which allows for convenient integration using await foreach loops to stream the response incrementally.

Does LLM know everything? Connectors and RAG with Semantic plugins and native functions

We have now reached a point where we can pose questions and receive answers, keep a record of these exchanges to inform future responses, and even stream the answers as they are generated. But is our work complete? Not quite.

As it stands, the only information available to the LLM for providing answers is the data it was initially trained on, plus any additional information we explicitly include in the prompt (like the current time, as previously mentioned). Consequently, if we inquire about topics outside the LLM’s training or areas where its knowledge is lacking, the responses we receive may be unhelpful, misleading, or entirely incorrect; such responses are often referred to as ‘hallucinations’.

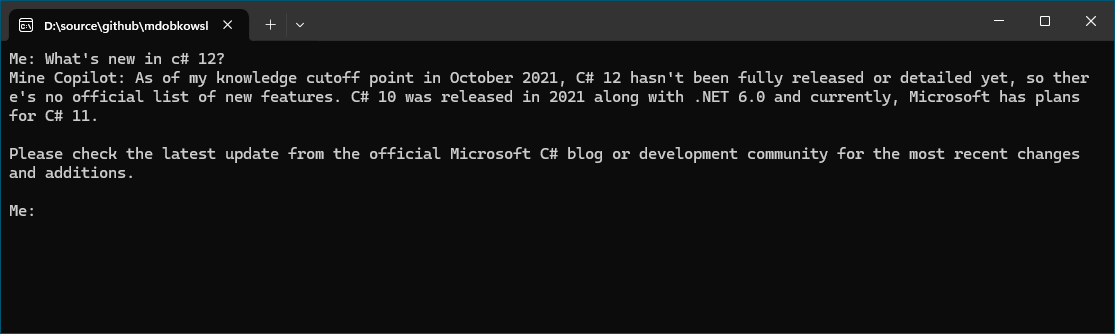

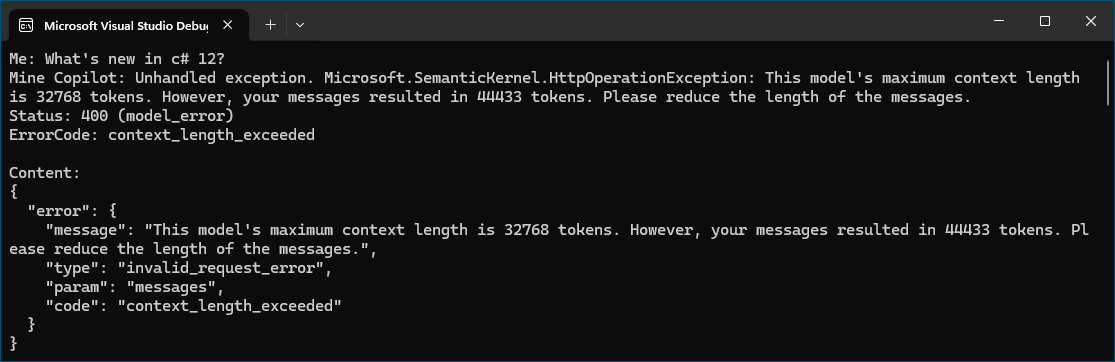

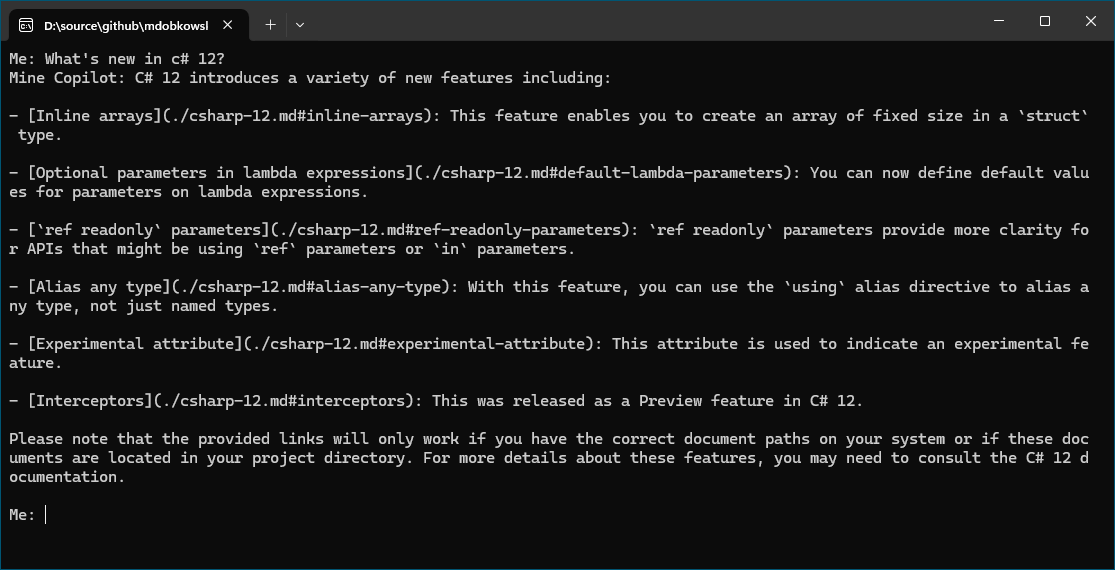

The question is about the latest C# 12 changes. C# 12 was released in November 2023, whereas the training data of this GPT-4-32k (0613) model ends in 2021. The model has no information about the newest capabilities, so it does not give a reasonable answer to the first question. We need to find a way to teach it about the things the user is asking about.

We already know how to teach an LLM: include the necessary information in the prompt. Take, for instance, the following Microsoft Learn articles:

- Relationships between language features and library types

- Version and update considerations for C# developers

- The history of C#

- What’s new in C# 11

- Breaking changes in Roslyn after .NET 6 all the way to .NET 7

- What’s new in C# 12

- Breaking changes in Roslyn after .NET 7 all the way to .NET 8

- What’s new in C# 13

- Breaking changes in Roslyn after .NET 8 all the way to .NET 9

- What’s new in .NET 8

- What’s new in the .NET 8 runtime

- What’s new in the SDK and tooling for .NET 8

- What’s new in containers for .NET 8

Most of these articles were published after this GPT-4-32k (0613) model was trained, and they describe the new capabilities of C# 12 in detail. By incorporating this content into the prompt, we can supply the LLM with the required knowledge. In the following example, I have expanded the previous code to download the content of these articles and then insert it into a user message.

This approach ensures that the LLM is provided with the latest information to assist with the query.

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using System.Text;

namespace chatSampleApp05
{
    internal class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            //Create new chat
            var chatService = kernel.GetRequiredService<IChatCompletionService>();
            var chat = new ChatHistory(
                systemMessage: "You are an AI assistant that helps people find information."
            );

            // Download the documents and add all of their contents to our chat
            var articleList = new List<string>
            {
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/relationships-between-language-and-library.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/version-update-considerations.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-version-history.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-11.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%207.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-12.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%208.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-13.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%209.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/overview.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/runtime.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/sdk.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/containers.md"
            };

            var articleStringBuilder = new StringBuilder();
            using (var httpClient = new HttpClient())
            {
                foreach (var article in articleList)
                {
                    articleStringBuilder.Append(await httpClient.GetStringAsync(article));
                }
                chat.AddUserMessage($"Here's some additional information: {articleStringBuilder.ToString()}");
            }

            string question;
            StringBuilder stringBuilder = new StringBuilder();

            //Question and Answer loop
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;
                chat.AddUserMessage(question);

                stringBuilder.Clear();
                Console.Write("Mine Copilot: ");
                // Stream the answer to the console while collecting it for the chat history
                await foreach (var message in chatService.GetStreamingChatMessageContentsAsync(chat))
                {
                    Console.Write(message);
                    stringBuilder.Append(message.Content);
                }
                Console.WriteLine();
                chat.AddAssistantMessage(stringBuilder.ToString());
                Console.WriteLine();
            }
        }
    }
}
```

and the result?

We have gone over the context window almost twice without adding any history to the conversation. We obviously need to include less information, but still need to ensure it is relevant information. RAG will help us…

Interested in building AI-powered apps? Connect with Marek Dobkowski to explore Microsoft solutions that simplify your workflow and enhance productivity.

Using RAG

Retrieval Augmented Generation (RAG) essentially means looking up relevant information and incorporating it into the prompt. Instead of including all possible information in the prompt, we index the additional information we care about. When a question is asked, we use that question to find the most relevant indexed content and add just that specific content to the prompt. To facilitate this process, we need embeddings.

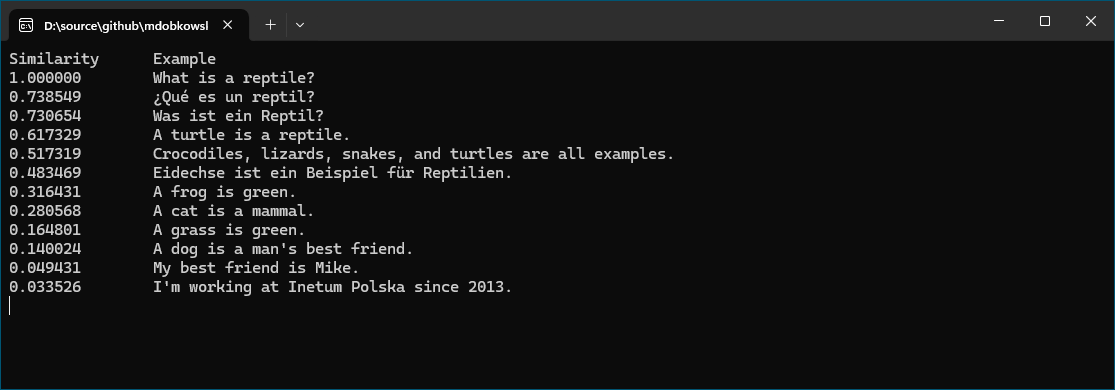

An embedding can be thought of as a vector (array) of floating-point values that represents the content and its semantic meaning. We can use a model specifically designed for embeddings to generate such a vector for a given input, and then store both the vector and the original text in a database. Later, when a question is posed, we can process that question through the same model to produce a vector, which we then use to find the most relevant embeddings in our database. We are not necessarily looking for exact matches, but rather for ones that are close enough. The term ‘close’ here is quite literal, as the lookups are typically performed using measures such as cosine similarity. For instance, consider this program:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Embeddings;
using System.Numerics.Tensors;

namespace chatSampleApp06
{
    internal class Program
    {
        static string embeddingDeploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:EmbeddingDeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Experimental
            #pragma warning disable SKEXP0010,SKEXP0001

            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAITextEmbeddingGeneration(embeddingDeploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            var input = "What is a reptile?";
            var examples = new string[]
            {
                "What is a reptile?",
                "¿Qué es un reptil?",
                "Was ist ein Reptil?",
                "A turtle is a reptile.",
                "Eidechse ist ein Beispiel für Reptilien.",
                "Crocodiles, lizards, snakes, and turtles are all examples.",
                "A frog is green.",
                "A grass is green.",
                "A cat is a mammal.",
                "A dog is a man's best friend.",
                "My best friend is Mike.",
                "I'm working at Inetum Polska since 2013."
            };

            // Generate embeddings for each piece of text
            var embeddingGenerator = kernel.GetRequiredService<ITextEmbeddingGenerationService>();
            var inputEmbedding = (await embeddingGenerator.GenerateEmbeddingsAsync([input])).First();
            var exampleEmbeddings = (await embeddingGenerator.GenerateEmbeddingsAsync(examples)).ToArray();

            var similarities = new List<Tuple<float, string>>();

            // Compute the cosine similarity between the input and each example
            for (int i = 0; i < exampleEmbeddings.Length; i++)
            {
                similarities.Add(
                    new Tuple<float, string>(
                        TensorPrimitives.CosineSimilarity(exampleEmbeddings[i].Span, inputEmbedding.Span),
                        examples[i]));
            }

            // Sort from most to least similar and print the results
            similarities.Sort((x, y) => y.Item1.CompareTo(x.Item1));
            Console.WriteLine("Similarity\tExample");
            foreach (var similarity in similarities)
            {
                Console.WriteLine($"{similarity.Item1:F6}\t{similarity.Item2}");
            }
            Console.ReadLine();
        }
    }
}
```

This program uses the Azure OpenAI embedding generation service to obtain an embedding vector (using the text-embedding-3-large model mentioned earlier in the post) for both an input and several other pieces of text. It then compares the resulting embedding for the input with the embeddings of those other texts, sorts the results by similarity, and prints them out.
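For readers who want to see what the comparison boils down to, here is a minimal hand-written sketch of cosine similarity and ranking on plain float arrays. SimilaritySketch and its members are hypothetical names; the sample above relies on TensorPrimitives.CosineSimilarity instead.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SimilaritySketch
{
    // cos(a, b) = (a . b) / (|a| * |b|)
    public static float CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
        {
            throw new ArgumentException("Vectors must have the same length.");
        }

        float dot = 0f, normA = 0f, normB = 0f;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }

        return dot / (MathF.Sqrt(normA) * MathF.Sqrt(normB));
    }

    // Returns the candidates ordered from most to least similar to the query.
    public static List<(float Score, string Text)> Rank(
        float[] query, IEnumerable<(string Text, float[] Vector)> candidates)
    {
        return candidates
            .Select(c => (Score: CosineSimilarity(query, c.Vector), Text: c.Text))
            .OrderByDescending(r => r.Score)
            .ToList();
    }
}
```

Vectors pointing in the same direction score close to 1, orthogonal vectors score 0, and opposite vectors score close to -1, regardless of their length.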

Let’s incorporate this concept into the chat app. In this round, I have augmented the previous chat example with a few things:

- In order for Semantic Kernel to handle the embedding generation through its abstractions, we need to include its Memory package. Please note the --prerelease flag, as this is an evolving area. While some Semantic Kernel components are stable, others are still in development and therefore marked as prerelease.

```shell
dotnet add package Microsoft.SemanticKernel.Plugins.Memory --prerelease
```

- Next, I need to create an ISemanticTextMemory for querying. I achieved this by using MemoryBuilder to combine an embeddings generator with a database. I specified the Azure OpenAI service as my embeddings generator using the WithAzureOpenAITextEmbeddingGeneration method. For the store, I registered a VolatileMemoryStore instance using the WithMemoryStore method. Although we will change this later, it will suffice for now. VolatileMemoryStore is essentially an implementation of Semantic Kernel’s IMemoryStore abstraction that wraps an in-memory dictionary.

- I downloaded the text and used Semantic Kernel’s TextChunker to break it into pieces. Then, I saved each piece to the memory store using SaveInformationAsync. This process generates an embedding for the text and stores the resulting vector along with the input text in the dictionary.

- When it is time to ask a question, instead of just adding the question to the chat history and submitting it, we first use the question to perform a SearchAsync on the memory store. This generates an embedding vector for the question and searches the store for the closest vectors. I have it return the three closest matches, append the associated text together, add the results to the chat history, and submit it. After submitting the request, I remove this additional context from the chat history to avoid sending it again in subsequent requests, as it can consume much of the allowed context window.
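The chunking step from the list above can be pictured with a deliberately naive sketch. It is not TextChunker's algorithm: NaiveChunker is a hypothetical helper, and it counts whitespace-separated words as a stand-in for tokens.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class NaiveChunker
{
    // Groups consecutive lines into chunks of at most maxWords words.
    // Assumption: words approximate tokens; a single line longer than
    // maxWords is kept whole and becomes its own oversized chunk.
    public static List<string> Split(string text, int maxWords)
    {
        var chunks = new List<string>();
        var current = new StringBuilder();
        int currentWords = 0;

        foreach (var line in text.Split('\n'))
        {
            int words = line.Split(
                new[] { ' ', '\t', '\r' },
                StringSplitOptions.RemoveEmptyEntries).Length;
            if (words == 0)
            {
                continue; // skip blank lines
            }

            // Start a new chunk when adding this line would exceed the limit.
            if (currentWords > 0 && currentWords + words > maxWords)
            {
                chunks.Add(current.ToString());
                current.Clear();
                currentWords = 0;
            }

            if (currentWords > 0)
            {
                current.Append('\n');
            }
            current.Append(line.Trim());
            currentWords += words;
        }

        if (currentWords > 0)
        {
            chunks.Add(current.ToString());
        }

        return chunks;
    }
}
```

Each resulting chunk would then be embedded and stored separately, so that a later search can return only the few chunks closest to the question.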

And full source code…

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using Microsoft.SemanticKernel.Memory;
using Microsoft.SemanticKernel.Text;
using System.Net;
using System.Text;
using System.Text.RegularExpressions;

namespace chatSampleApp07
{
    internal class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string embeddingDeploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:EmbeddingDeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            //Experimental
            #pragma warning disable SKEXP0001, SKEXP0010, SKEXP0050

            //Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            //Initialize Memory Builder
            var memoryBuilder = new MemoryBuilder()
                .WithMemoryStore(new VolatileMemoryStore())
                .WithAzureOpenAITextEmbeddingGeneration(
                    deploymentName: embeddingDeploymentName,
                    endpoint: endpoint,
                    apiKey: apiKey
                );
            var memory = memoryBuilder.Build();

            // Download the documents, split them into chunks, and save each chunk to the memory store
            var articleList = new List<string>
            {
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/relationships-between-language-and-library.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/version-update-considerations.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-version-history.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-11.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%207.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-12.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%208.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-13.md",
                "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%209.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/overview.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/runtime.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/sdk.md",
                "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/containers.md"
            };

            var collectionName = "microsoft-news";
            using (var httpClient = new HttpClient())
            {
                var allParagraphs = new List<string>();
                foreach (var article in articleList)
                {
                    var content = await httpClient.GetStringAsync(article);
                    var lines = TextChunker.SplitPlainTextLines(content, 64);
                    var paragraphs = TextChunker.SplitPlainTextParagraphs(lines, 512);
                    allParagraphs.AddRange(paragraphs);
                }

                for (var i = 0; i < allParagraphs.Count; i++)
                {
                    await memory.SaveInformationAsync(collectionName, allParagraphs[i], $"paragraph[{i}]");
                }
            }

            //Create new chat
            var chatService = kernel.GetRequiredService<IChatCompletionService>();
            var chat = new ChatHistory(
                systemMessage: "You are an AI assistant that helps people find information."
            );

            string question;
            var responseBuilder = new StringBuilder();
            var contextBuilder = new StringBuilder();

            //Question and Answer loop
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;

                // Start from an empty context so results from earlier questions do not accumulate
                contextBuilder.Clear();
                await foreach (var result in memory.SearchAsync(collectionName, question, limit: 3))
                {
                    contextBuilder.AppendLine(result.Metadata.Text);
                }

                // Add the retrieved context as a temporary user message and remember its position
                var contextToRemove = -1;
                if (contextBuilder.Length > 0)
                {
                    contextBuilder.Insert(0, "Here's some additional information: ");
                    contextToRemove = chat.Count;
                    chat.AddUserMessage(contextBuilder.ToString());
                }

                chat.AddUserMessage(question);

                responseBuilder.Clear();
                Console.Write("Mine Copilot: ");
                await foreach (var message in chatService.GetStreamingChatMessageContentsAsync(chat, null, kernel))
                {
                    Console.Write(message);
                    responseBuilder.Append(message.Content);
                }
                Console.WriteLine();
                chat.AddAssistantMessage(responseBuilder.ToString());

                // Remove the temporary context so it is not sent again with later requests
                if (contextToRemove >= 0)
                {
                    chat.RemoveAt(contextToRemove);
                }
                Console.WriteLine();
            }
        }
    }
}
```

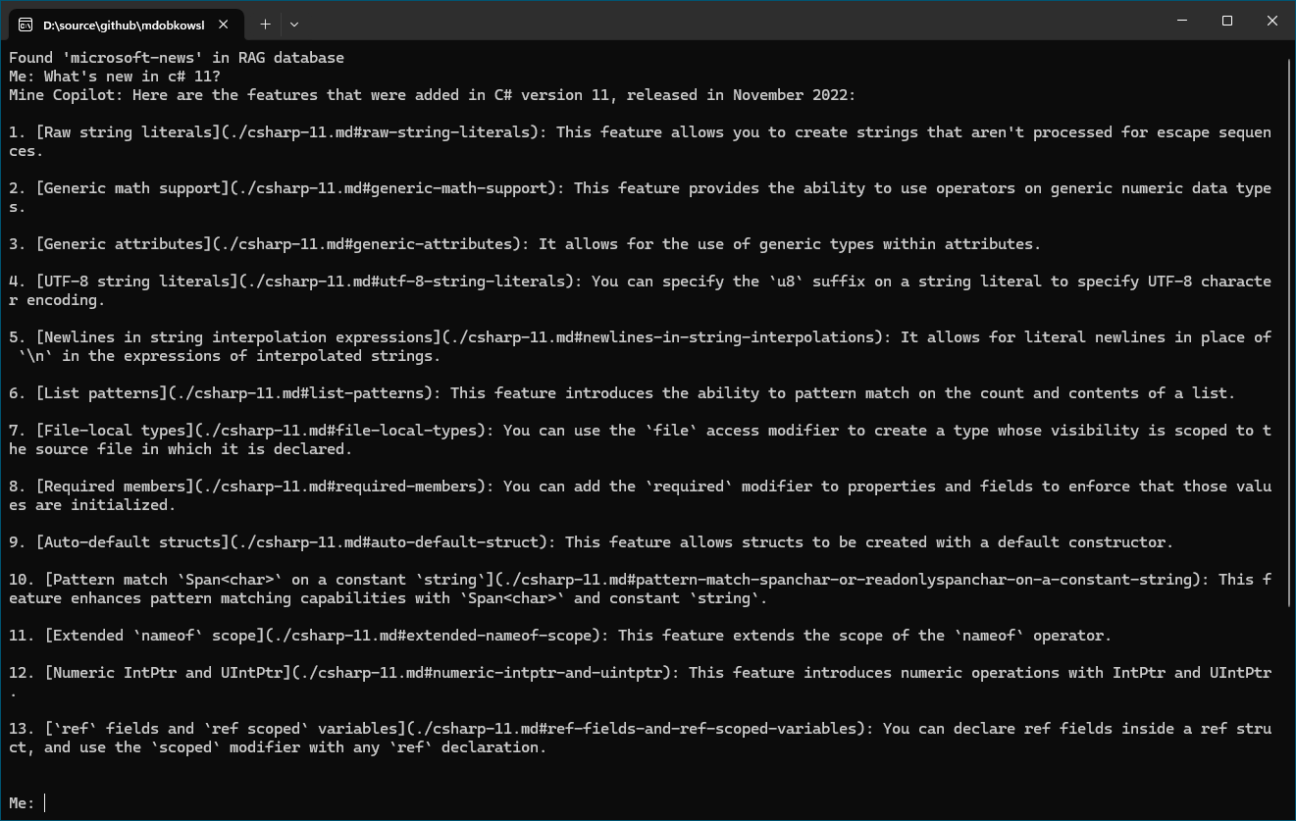

The text chunking code divided the documents into 104 “paragraphs” resulting in 104 embeddings being created and stored in the database. The exciting part is that with all these embeddings, when we pose our question, the database retrieves the most relevant material and adds the additional text to the prompt. Now, when we ask the same questions as before, we receive a much more helpful and accurate response:

Storing memories

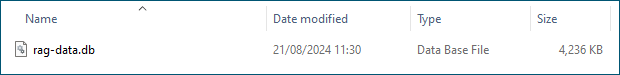

Naturally, we do not want to reindex all documents every time the application starts. Imagine a public website serving chats for thousands of users over hundreds of documents: reindexing all content on every restart would be not only time-consuming but also unnecessarily expensive. For instance, the Azure OpenAI embedding model I use costs €0.000121 per 1,000 tokens (Azure OpenAI Service pricing), so indexing just these documents costs less than a cent (but remember: “scale makes a difference”).
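As a rough worked example of that arithmetic, the sketch below estimates an upper bound for one indexing run. It assumes the 104 chunks reported above are each at the 512-token limit used in the chunking call, which overstates the real token count; EmbeddingCost is a hypothetical helper name.

```csharp
using System;

public static class EmbeddingCost
{
    // Cost = tokens / 1000 * price per 1,000 tokens.
    public static decimal Estimate(long tokens, decimal pricePer1000Tokens)
        => tokens / 1000m * pricePer1000Tokens;
}
```

Under these assumptions, EmbeddingCost.Estimate(104 * 512, 0.000121m) evaluates to 0.006443008, that is, roughly two thirds of a euro cent per full reindexing. The amount only becomes significant when multiplied by many documents and many restarts.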

Therefore, we should switch to using persistent storage. Semantic Kernel provides various IMemoryStore implementations, and we can easily switch to one that persists the results. For example, let’s switch to one based on Sqlite. To do this, we need another NuGet package:

```shell
dotnet add package Microsoft.SemanticKernel.Connectors.Sqlite --prerelease
```

With that, we can change just one line of code to switch from the VolatileMemoryStore:

```csharp
.WithMemoryStore(new VolatileMemoryStore())
```

to the SqliteMemoryStore:

```csharp
.WithMemoryStore(await SqliteMemoryStore.ConnectAsync("data\\rag-data.db"))
```

Sqlite is an embedded SQL database engine that operates within the same process and stores its data in standard disk files. In this case, it will connect to a rag-data.db file, creating it if it does not already exist. Note that only the file is created automatically: the data directory must already exist, and the Windows-style path separator used here may need adjusting on other platforms. However, if we were to run this, we would still end up generating the embeddings again, as our previous example did not include a check to see if the data already existed. Therefore, our final step is to add a guard to prevent this redundant work.

```csharp
var collectionName = "microsoft-news";
var collections = await memory.GetCollectionsAsync();

if (!collections.Contains(collectionName))
{
    ... // same code as before to download and process the documents
}
else
{
    Console.WriteLine($"Found '{collectionName}' in RAG database");
}
```

You get the idea. Here is the complete version using SQLite:

using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using Microsoft.SemanticKernel.Connectors.Sqlite;
using Microsoft.SemanticKernel.Memory;
using Microsoft.SemanticKernel.Text;
using System.Text;

namespace chatSampleApp08
{
    internal class Program
    {
        static string deploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:DeploymentName")!;
        static string embeddingDeploymentName = Environment.GetEnvironmentVariable("AI:OpenAI:EmbeddingDeploymentName")!;
        static string endpoint = Environment.GetEnvironmentVariable("AI:OpenAI:Endpoint")!;
        static string apiKey = Environment.GetEnvironmentVariable("AI:OpenAI:APIKey")!;

        static async Task Main(string[] args)
        {
            // Suppress warnings for the experimental Semantic Kernel APIs used below
            #pragma warning disable SKEXP0001, SKEXP0010, SKEXP0020, SKEXP0050

            // Initialize Semantic Kernel
            var builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
            var kernel = builder.Build();

            // Initialize Memory Builder with a persistent SQLite store
            var memoryBuilder = new MemoryBuilder()
                .WithMemoryStore(await SqliteMemoryStore.ConnectAsync("data\\rag-data.db"))
                .WithAzureOpenAITextEmbeddingGeneration(
                    deploymentName: embeddingDeploymentName,
                    endpoint: endpoint,
                    apiKey: apiKey
                );
            var memory = memoryBuilder.Build();

            var collectionName = "microsoft-news";
            var collections = await memory.GetCollectionsAsync();
            if (!collections.Contains(collectionName))
            {
                // Download the documents and add their contents to the memory store
                var articleList = new List<string>
                {
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/relationships-between-language-and-library.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/version-update-considerations.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-version-history.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-11.md",
                    "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%207.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-12.md",
                    "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%208.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/csharp/whats-new/csharp-13.md",
                    "https://raw.githubusercontent.com/dotnet/roslyn/main/docs/compilers/CSharp/Compiler%20Breaking%20Changes%20-%20DotNet%209.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/overview.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/runtime.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/sdk.md",
                    "https://raw.githubusercontent.com/dotnet/docs/main/docs/core/whats-new/dotnet-8/containers.md"
                };

                using (var httpClient = new HttpClient())
                {
                    // Split every article into paragraph-sized chunks
                    var allParagraphs = new List<string>();
                    foreach (var article in articleList)
                    {
                        var content = await httpClient.GetStringAsync(article);
                        var lines = TextChunker.SplitPlainTextLines(content, 64);
                        var paragraphs = TextChunker.SplitPlainTextParagraphs(lines, 512);
                        allParagraphs.AddRange(paragraphs);
                    }

                    // Generate an embedding for each chunk and store it
                    for (var i = 0; i < allParagraphs.Count; i++)
                    {
                        await memory.SaveInformationAsync(collectionName, allParagraphs[i], $"paragraph[{i}]");
                    }
                }
            }
            else
            {
                Console.WriteLine($"Found '{collectionName}' in RAG database");
            }

            // Create new chat
            var chatService = kernel.GetRequiredService<IChatCompletionService>();
            var chat = new ChatHistory(
                systemMessage: "You are an AI assistant that helps people find information."
            );
            string question;
            var responseBuilder = new StringBuilder();
            var contextBuilder = new StringBuilder();

            // Question and Answer loop
            while (true)
            {
                Console.Write("Me: ");
                question = Console.ReadLine()!;

                // Start from an empty context so results from earlier questions do not pile up
                contextBuilder.Clear();
                await foreach (var result in memory.SearchAsync(collectionName, question, limit: 3))
                {
                    contextBuilder.AppendLine(result.Metadata.Text);
                }

                // Temporarily add the retrieved context to the chat, remembering its position
                var contextToRemove = -1;
                if (contextBuilder.Length > 0)
                {
                    contextBuilder.Insert(0, "Here's some additional information: ");
                    contextToRemove = chat.Count;
                    chat.AddUserMessage(contextBuilder.ToString());
                }

                chat.AddUserMessage(question);

                responseBuilder.Clear();
                Console.Write("Mine Copilot: ");
                await foreach (var message in chatService.GetStreamingChatMessageContentsAsync(chat, null, kernel))
                {
                    Console.Write(message);
                    responseBuilder.Append(message.Content);
                }
                Console.WriteLine();
                chat.AddAssistantMessage(responseBuilder.ToString());

                // Remove the injected context so it does not accumulate in the chat history
                if (contextToRemove >= 0)
                {
                    chat.RemoveAt(contextToRemove);
                }

                Console.WriteLine();
            }
        }
    }
}

Now, when we run it, the first invocation will still index everything, but after that the data is already indexed and subsequent invocations can simply use it.
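One practical detail about the "data\\rag-data.db" path used above: SQLite creates a missing database file, but it does not create missing parent directories, so on Windows the data directory has to exist before the first run. On Linux or macOS the backslash is simply treated as part of the file name. The following is a minimal sketch of a more portable setup; EnsureDatabasePath is a hypothetical helper written for this illustration, not part of Semantic Kernel, and it is shown with top-level statements for brevity.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of Semantic Kernel): creates the directory
// if needed and returns a path that uses the platform's separator.
string EnsureDatabasePath(string directory, string fileName)
{
    Directory.CreateDirectory(directory);      // does nothing if the directory already exists
    return Path.Combine(directory, fileName);  // "data\rag-data.db" on Windows, "data/rag-data.db" elsewhere
}

var dbPath = EnsureDatabasePath("data", "rag-data.db");
Console.WriteLine($"Database path: {dbPath}");
```

The resulting dbPath could then be passed to SqliteMemoryStore.ConnectAsync in place of the hard-coded string.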

While SQLite is a fantastic tool, it is not specifically optimized for this type of search. In fact, the code for this SqliteMemoryStore in SK merely enumerates the entire collection and performs a `CosineSimilarity` check on each entry:

// from: https://github.com/microsoft/semantic-kernel/blob/9264b3e0b42e184b7e9e8b2a073d8a721c4af92a/dotnet/src/Connectors/Connectors.Memory.Sqlite/SqliteMemoryStore.cs#L135
await foreach (var record in this.GetAllAsync(collectionName, cancellationToken).ConfigureAwait(false))
{
    if (record is not null)
    {
        double similarity = TensorPrimitives.CosineSimilarity(embedding.Span, record.Embedding.Span);
        ...
    }
}
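To make the cost of that approach concrete, here is a minimal, self-contained sketch of a brute-force nearest-neighbor search. It is not SK's implementation: CosineSimilarity is written by hand so no extra package is needed, FindNearest and the tuple-based records are hypothetical names chosen for this illustration, and the three-dimensional vectors are toy data standing in for real embeddings.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Cosine similarity: dot(a, b) / (|a| * |b|). Assumes non-zero vectors of equal length.
double CosineSimilarity(float[] a, float[] b)
{
    if (a.Length != b.Length) throw new ArgumentException("Vectors must have the same length.");
    double dot = 0, normA = 0, normB = 0;
    for (var i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Hypothetical brute-force search: scores every record, then keeps the best `limit`.
// The work grows linearly with the number of stored embeddings.
List<(string Id, double Score)> FindNearest(
    IEnumerable<(string Id, float[] Embedding)> records, float[] query, int limit)
{
    return records
        .Select(r => (r.Id, Score: CosineSimilarity(query, r.Embedding)))
        .OrderByDescending(r => r.Score)
        .Take(limit)
        .ToList();
}

// Toy data; real embeddings have hundreds or thousands of dimensions.
var store = new List<(string Id, float[] Embedding)>
{
    ("paragraph[0]", new float[] { 1f, 0f, 0f }),
    ("paragraph[1]", new float[] { 0f, 1f, 0f }),
    ("paragraph[2]", new float[] { 0.9f, 0.1f, 0f }),
};

foreach (var (id, score) in FindNearest(store, new float[] { 1f, 0f, 0f }, limit: 2))
{
    Console.WriteLine($"{id}: {score:F3}");
}
```

With this toy data, paragraph[0] comes back first and paragraph[2] second. Every query touches every record, which is fine for a few hundred paragraphs but becomes the bottleneck as the collection grows.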

For real scale and the ability to share data across multiple frontends, we need a dedicated ‘vector database’ designed for storing and searching embeddings. There are many such vector databases available now, including Azure AI Search, Chroma, Milvus, Pinecone, Qdrant, Weaviate, and many more. We can set one of these up, change our `WithMemoryStore` call to use the appropriate connector, and we are ready to go. Let’s proceed with that. For this example, I have chosen Azure AI Search.

I add the relevant Semantic Kernel “connector” to my project:

dotnet add package Microsoft.SemanticKernel.Connectors.AzureAISearch --prerelease

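and then add a using directive for Microsoft.SemanticKernel.Connectors.AzureAISearch, along with two fields for the service endpoint and API key: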

static string azureAISearchEndpoint = Environment.GetEnvironmentVariable("AI:AzureAISearch:Endpoint")!;

static string azureAISearchApiKey = Environment.GetEnvironmentVariable("AI:AzureAISearch:APIKey")!;

change from:

.WithMemoryStore(await SqliteMemoryStore.ConnectAsync("data\\rag-data.db"))

to:

.WithMemoryStore(new AzureAISearchMemoryStore(azureAISearchEndpoint, azureAISearchApiKey))

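And that’s it! The application works as before, but the similarity search now runs inside a service built for it instead of scanning every stored record locally, so it should stay fast as the amount of indexed data grows.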

Summary: best practices and considerations for your Semantic Kernel integration

Wow! Clearly, I have left out many crucial details that any real application would need to address. For instance, how should the data being indexed be cleaned, normalized, and chunked? How should errors be managed? How can we limit the amount of data sent with each request, such as restricting chat history or the size of the found embeddings? How can the application be made more secure (for example, API key versus managed identity)? Which service is best for storing all the information?
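As one illustration of the chat history question, here is a minimal sketch that keeps the system message plus only the most recent messages. It deliberately uses plain tuples rather than Semantic Kernel's ChatHistory so that it runs on its own; TrimHistory, the maxRecent parameter, and the placeholder messages are all hypothetical, and the sketch assumes the first entry is the system message.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: keeps the first (system) message plus the last
// `maxRecent` messages and drops the older turns in between.
List<(string Role, string Content)> TrimHistory(
    List<(string Role, string Content)> messages, int maxRecent)
{
    // Nothing to drop: return a copy so the caller's list is never modified.
    if (messages.Count <= maxRecent + 1) return messages.ToList();

    var trimmed = new List<(string Role, string Content)> { messages[0] };
    trimmed.AddRange(messages.Skip(messages.Count - maxRecent));
    return trimmed;
}

// Placeholder conversation; the contents are illustrative only.
var history = new List<(string Role, string Content)>
{
    ("system", "You are an AI assistant that helps people find information."),
    ("user", "(question 1)"),
    ("assistant", "(answer 1)"),
    ("user", "(question 2)"),
    ("assistant", "(answer 2)"),
};

foreach (var (role, content) in TrimHistory(history, maxRecent: 2))
{
    Console.WriteLine($"{role}: {content}");
}
```

Here the first question and answer are dropped, while the system message and the latest exchange are kept. Counting messages is the simplest possible policy; a real application would more likely budget by token count, or summarize older turns instead of discarding them.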

And there are many other considerations, including making the UI much more attractive than my basic Console.WriteLine calls. Despite these missing details, I hope it is evident that you can start integrating this kind of functionality into your applications right away.
